How does a ‘requester’ know that a ‘submitter’ has provided a correct, complete, accurate and relevant ‘submission’? A framework to evaluate the planning, receipt, and evaluation of submissions.

What do the following three things have in common?

- An under-eleven (U-11) soccer club opens registration for the season.

- A government issues a request for proposals for jet fighters.

- A controller issues a corporate budget call.

In each case, the requester is going out to an environment to solicit information. Although the environments are very different, they all share this problem:

How does a ‘requester’ know that a ‘submitter’ has provided a correct, complete, accurate and relevant ‘submission’?

Although the question seems pedestrian, answering it correctly can save all three requesters considerable time, effort and aggravation.

One Size Fits All

I have run about a ‘ka-billion’ of these over my career and so have an intuitive sense of the steps. It was therefore an interesting exercise to write down a general framework of what you should do: a sort of one-size-fits-all submissions-validation process to receive, verify, validate, correct, collate, report and certify submissions.

Okay, one size does not fit all. A U-11 soccer program does not carry the same risks or costs as a major procurement. Nevertheless, a ‘Plan-Do-Check-Act’ philosophy should prevail, if for no other reason than to reduce time wasted on poor-quality data.

Submissions R’Us

There are two distinct parts to the submission process. The first Check verifies and validates the receipt of the submissions at a non-qualitative level [1], while the second Check makes the applicable selection. Using a seven-step process, this first Check answers the question above:

How does a ‘requester’ know that a ‘submitter’ has provided a correct, complete, accurate and relevant ‘submission’?

Ideally this question is answered quickly or the validations are built into the submission process. By performing this first Check, organizations have assurance that the submissions are consistent, comparable and valid. The second Check is the scoring and relative ranking of the submissions (and out of scope for this blog).

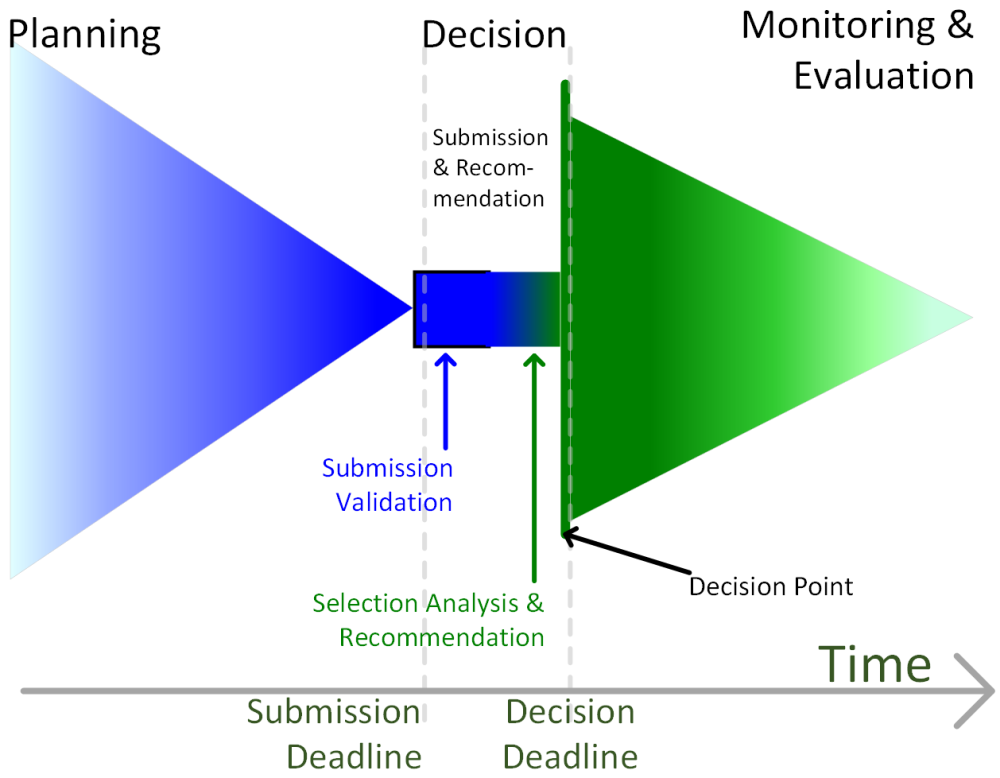

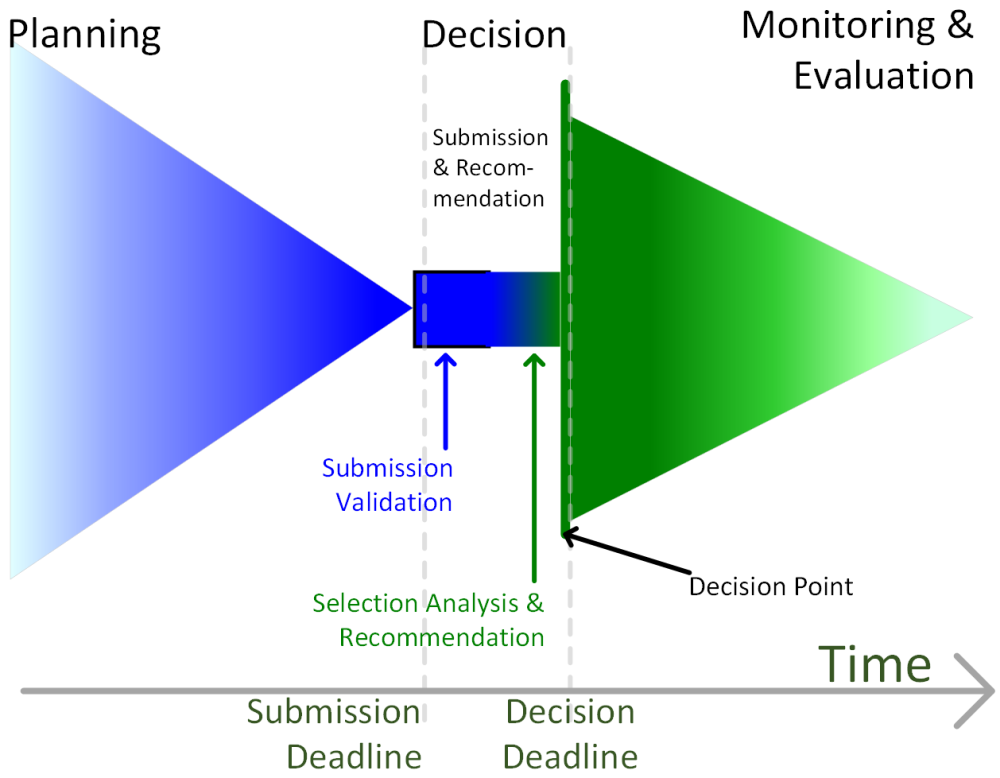

The graphics above present these two Checks and their location in the overall planning process. Note that blue areas represent planning and green areas represent reporting, monitoring and evaluation. If the graphic looks familiar, it is a continuation of the ‘NOW-Event Map’ introduced a few blogs ago.

7 Steps to Submissions Happiness

- Submission Scope & Infrastructure: Submission planning for volume, actors and other environmental considerations.

- The Submission-Environment Boundary Counter: Response count and submission inventory control.

- Basic Form-Compliance: Adherence to explicit and implicit instructions related to the form and the identification of gross deviations.

- Priority/Mandatory: Completion of most critical fields and first pass qualitative analysis of the content.

- Middling-Importance: Secondary and tertiary fields completed with first pass qualitative analysis of the content.

- Residual Fields: Fields not considered in the above analysis, and whether they matter for formulating a recommendation or for inclusion in subsequent analysis.

- Evaluation Phase Hand Off: Transfer to the evaluation phase, updating metadata as applicable. Analysis of future submissions based on the above experience and updates to applicable submission forms, instructions, etc.

Questions to consider for each step are:

- Definition: What the step is, why it is needed, and any relevant examples (not necessarily specific to NDA).

- Proposed Tests: Methods to complete the intended analysis.

Implicit in the following discussion is a communication process between the requester and the submitter. For example, if a submission fails the most basic validation tests, it may be returned for re-submission. Less dramatically, a submission may be returned for clarification or to improve its quality (e.g. shortening a long-winded description). Organizations need to determine how formal this communication should be. At the most basic level, it is a record of emails going back and forth; a more formal approach would include un-editable comment fields and audit logs.
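As a rough illustration of the more formal end of that spectrum, here is a minimal Python sketch of an append-only audit log. The `AuditLog` and `LogEntry` names are hypothetical, and an in-memory list stands in for whatever store an organization actually uses. Entries are frozen dataclasses, so a recorded note cannot be edited after the fact, and history is handed back as a tuple so callers cannot slip entries in.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: fields cannot be reassigned once recorded
class LogEntry:
    timestamp: datetime
    submission_id: str
    author: str
    note: str


class AuditLog:
    """Hypothetical append-only log: entries can be added and read, never edited."""

    def __init__(self) -> None:
        self._entries: list[LogEntry] = []

    def record(self, submission_id: str, author: str, note: str) -> LogEntry:
        entry = LogEntry(datetime.now(timezone.utc), submission_id, author, note)
        self._entries.append(entry)
        return entry

    def history(self, submission_id: str) -> tuple[LogEntry, ...]:
        # Return an immutable tuple, in the order the entries were recorded.
        return tuple(e for e in self._entries if e.submission_id == submission_id)


log = AuditLog()
log.record("S-002", "requester", "Returned for re-submission: budget field blank")
log.record("S-002", "submitter", "Re-submitted with budget completed")
for entry in log.history("S-002"):
    print(entry.timestamp.isoformat(), entry.author, entry.note)
```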

Step 1: Submission Scope & Infrastructure

- Definition: Submission planning for volume, actors and other environmental considerations and establishment of necessary infrastructure to support the process.

- Proposed Tests:

- Estimate the total population (N) that could potentially submit and the probability of each doing so (resulting in the expected number of submissions, n).

- Determine the control formalities required for things such as communication, coordination, control or command functions relative to the population.

- As required, determine and implement training and assets to support the process.

- A data dictionary can aid in establishing an expected-versus-actual comparison.
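The population estimate can be made slightly more concrete. The sketch below assumes the population can be split into segments, each with its own size N_i and submission probability p_i, and that submitters decide independently; under that assumption each segment's count is binomial, so n is the sum of N_i × p_i and the spread gives a plausible range for the control count. The segment names and figures are invented for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    """A group of potential submitters (hypothetical example segments)."""
    name: str
    population: int            # N_i: how many could submit
    submit_probability: float  # p_i: estimated chance that each one does


def expected_submissions(segments: list[Segment]) -> tuple[float, float]:
    """Return the expected count n and its standard deviation.

    Assumes independent decisions, so each segment's count is binomial:
    mean N_i * p_i, variance N_i * p_i * (1 - p_i).
    """
    mean = sum(s.population * s.submit_probability for s in segments)
    variance = sum(
        s.population * s.submit_probability * (1 - s.submit_probability)
        for s in segments
    )
    return mean, math.sqrt(variance)


segments = [
    Segment("returning players", population=60, submit_probability=0.9),
    Segment("new families", population=200, submit_probability=0.1),
]
n, sd = expected_submissions(segments)
print(f"expect about {n:.0f} submissions (+/- {2 * sd:.0f})")
```

For the U-11 example this gives roughly 74 registrations; a count far outside that band is a prompt to ask why, not proof of a problem.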

Step 2: The Submission-Environment Boundary Counter:

- Definition: Response count and submission inventory control:

- Has every submission been received?

- Are there duplicate submissions?

- Are there any lost/limbo submissions?

- Are there any submissions received for the wrong purpose?

- Are there submitters who were either not allowed to submit or not expected to submit, and should these submissions be accepted?

- Proposed Tests:

- Inventory control numbers are issued for self-identified submitters.

- Count of submissions is compared against the control-count.

- Informal poll of potential submitters as to their intentions.
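The control-count comparison lends itself to a small reconciliation routine. This is a minimal sketch assuming each expected submitter was issued an inventory control number (the `S-001` style IDs are hypothetical) and that every received submission quotes its number. It answers three of the Step 2 questions directly: what is missing, what is duplicated, and what arrived without ever being issued.

```python
from collections import Counter


def reconcile(control_ids: set[str], received_ids: list[str]) -> dict[str, list[str]]:
    """Compare received submissions against the issued inventory control numbers."""
    counts = Counter(received_ids)
    received = set(counts)
    return {
        "missing": sorted(control_ids - received),       # issued but not received
        "duplicates": sorted(i for i, c in counts.items() if c > 1),
        "unexpected": sorted(received - control_ids),    # received but never issued
    }


control = {"S-001", "S-002", "S-003"}
received = ["S-001", "S-003", "S-001", "S-999"]
report = reconcile(control, received)
print(f"control count {len(control)}, received {len(received)}")
print(report)
```

Here the raw counts differ by one, but the breakdown shows the real picture: one missing, one duplicate and one submission nobody expected.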

Step 3: Basic Form-Compliance:

- Definition: Adherence to explicit and implicit instructions related to the form and the identification of gross deviations.

- Proposed Tests:

- Scan of the submission to see that each of the fields matches the expected data type (e.g. a phone number is only numeric).

- Average field length is within a tolerance range (e.g. an address is < 100 characters).

- NULL values appear only in fields where information is optional.

- Perform a manual scan of the submission-files, ideally with a check list.

- Move the content into a central database and establish a data connection so that queries can be run in Excel or MS Access (or other readily available reporting tools).

- Create form/file level data entry validation.
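The first three tests above can be driven from the data dictionary. The sketch below is one possible shape, assuming each dictionary entry records whether a field is required, its maximum length and, optionally, a regular expression for its format; the `FieldRule` type and the example fields are hypothetical. It checks each value's length individually; an average across submissions could be computed the same way.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class FieldRule:
    """One data-dictionary entry (field names and limits are illustrative)."""
    name: str
    required: bool
    max_length: int
    pattern: Optional[str] = None  # regex the whole value must match


def check_form(record: dict, rules: list[FieldRule]) -> list[str]:
    """Return a list of basic form-compliance problems for one submission."""
    problems = []
    for rule in rules:
        value = record.get(rule.name)
        if value is None or str(value).strip() == "":
            if rule.required:
                problems.append(f"{rule.name}: required value is missing")
            continue  # an empty optional field is acceptable
        text = str(value)
        if len(text) > rule.max_length:
            problems.append(f"{rule.name}: longer than {rule.max_length} characters")
        if rule.pattern and re.fullmatch(rule.pattern, text) is None:
            problems.append(f"{rule.name}: does not match the expected format")
    return problems


rules = [
    FieldRule("name", required=True, max_length=60),
    FieldRule("phone", required=False, max_length=15, pattern=r"\d+"),
    FieldRule("address", required=True, max_length=100),
]
print(check_form({"name": "Sam Lee", "phone": "555-0100", "address": ""}, rules))
```

The hyphenated phone number fails the numeric-only rule and the blank address is flagged as missing, which is exactly the kind of gross deviation this step is meant to catch before anyone reads the content.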

Step 4: Priority/Mandatory:

- Definition: Completion of most critical fields and first pass qualitative analysis of the content.

- Proposed Tests:

- Administrative fields: Project name, submitter name, etc. are spelled correctly and consistently, completed accurately and meet data standards.

- Top Priority fields: Excluding the above administrative fields, for the top (n) fields, perform a quality check.

- This may be an external benchmark comparison (e.g. a description of at most 500 characters, written in the active voice) or an inter-submission comparison (e.g. reviewing averages, minimums and maximums).

- Compare submissions to expected results described in the data dictionary.

- Verify that the submissions are of sufficient quality to proceed further.
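For the inter-submission comparison, one simple option (an assumption on my part, not the only way) is to flag values that sit more than k standard deviations from the mean of all submissions. The budget figures and the default k = 2 below are invented for illustration; with very small batches a z-score is a blunt tool, so the flag means "look closer", not "reject".

```python
from statistics import mean, pstdev


def flag_outliers(values: dict[str, float], k: float = 2.0) -> list[str]:
    """Return IDs whose value is more than k population standard deviations
    from the mean of all submissions (a simple inter-submission comparison)."""
    data = list(values.values())
    mu, sigma = mean(data), pstdev(data)
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return sorted(sid for sid, v in values.items() if abs(v - mu) > k * sigma)


requested_budget = {"A": 100, "B": 110, "C": 95, "D": 105,
                    "E": 98, "F": 102, "G": 90, "H": 400}
data = list(requested_budget.values())
print(f"mean {mean(data):.1f}, min {min(data)}, max {max(data)}")
print(flag_outliers(requested_budget))  # ['H']
```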

Step 5: Middling-Importance:

- Definition: Secondary and tertiary fields completed with first pass qualitative analysis of the content.

- Proposed Tests:

- Similar to the mandatory-field tests, but with less diligence as appropriate.

- If the submission template and definitions are new, it is possible that a field may be promoted or demoted.
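One way to apply "less diligence" (my assumption; the framework does not prescribe it) is to review the secondary and tertiary fields on a random sample of submissions rather than on all of them. The sketch draws a reproducible sample using a fixed seed, so the same selection can be re-drawn if the review is ever audited; the 25% share and the IDs are illustrative.

```python
import random


def review_sample(submission_ids: list[str], share: float, seed: int = 0) -> list[str]:
    """Pick a reproducible random subset of submissions for secondary-field review.

    share is the fraction to review (e.g. 0.25); at least one submission is
    chosen when any exist, and never more than are available.
    """
    if not submission_ids:
        return []
    size = min(len(submission_ids), max(1, round(len(submission_ids) * share)))
    rng = random.Random(seed)  # fixed seed so the sample can be re-drawn for audit
    return sorted(rng.sample(submission_ids, size))


ids = [f"S-{i:03d}" for i in range(1, 41)]
print(review_sample(ids, share=0.25))  # 10 of the 40 submissions
```

If the sample turns up frequent problems in a field, that is a signal the field may deserve promotion to the priority tier.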

Step 6: Residual Fields:

- Definition: Fields not considered in the above analysis.

- Proposed Tests:

- Determine whether the field is to be evaluated; if so, promote it to one of the above two categories (or create an additional category, e.g. for future consideration).

- If it is not evaluated, why was this information requested? Can it be ignored for evaluation purposes, or dropped from future data collection?
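Finding the residual fields is a set difference between the fields on the form and the fields already assigned to a tier. This sketch assumes the tiers are kept as named sets of field names; the tier labels and fields are hypothetical.

```python
def residual_fields(form_fields: list[str], tiers: dict[str, set[str]]) -> list[str]:
    """Return form fields that no evaluation tier claims, in form order."""
    classified = set().union(*tiers.values())
    return [f for f in form_fields if f not in classified]


tiers = {
    "priority": {"project_name", "submitter_name", "budget"},
    "middling": {"start_date", "partners"},
}
form_fields = ["project_name", "submitter_name", "budget",
               "start_date", "partners", "fax_number", "referral_source"]
leftover = residual_fields(form_fields, tiers)
print(leftover)  # ['fax_number', 'referral_source']

# A residual field that turns out to matter is promoted into a tier (here a new
# "future_consideration" tier); whatever is still left is a candidate to drop.
tiers.setdefault("future_consideration", set()).add("referral_source")
print(residual_fields(form_fields, tiers))  # ['fax_number']
```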

Step 7: Evaluation Phase Hand Off:

- Definition: Transfer to the evaluation phase, updating as applicable meta-data. Analysis of future submissions based on the above experience and updates to applicable submission forms, instructions, etc.

- Proposed Tests:

- Either en masse or individually, conduct a Go/No-Go assessment of whether the information is of suitable quality for evaluation.

- The acceptance process may involve a formal certification process or be informal.

- Communicate the results to the submitters as applicable.
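The Go/No-Go assessment can be sketched both individually and en masse. The version below assumes each earlier check produces findings tagged with a two-level severity ("major" or "minor"), that any major finding or more than three minor ones makes a submission No-Go, and that the batch proceeds only if at least 90% of submissions are Go. All of those thresholds and names are hypothetical and would be set per process.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    severity: str  # "major" or "minor" (an assumed two-level scale)
    message: str


def decide(findings: list[Finding], max_minor: int = 3) -> str:
    """Go only if there are no major findings and few minor ones."""
    majors = sum(f.severity == "major" for f in findings)
    minors = sum(f.severity == "minor" for f in findings)
    return "Go" if majors == 0 and minors <= max_minor else "No-Go"


def batch_decision(all_findings: dict[str, list[Finding]],
                   min_go_share: float = 0.9) -> tuple[dict[str, str], bool]:
    """Decide per submission, then en masse: proceed if enough are Go."""
    per_submission = {sid: decide(f) for sid, f in all_findings.items()}
    if not per_submission:
        return per_submission, False
    go_share = sum(d == "Go" for d in per_submission.values()) / len(per_submission)
    return per_submission, go_share >= min_go_share


results, proceed = batch_decision({
    "S-001": [],
    "S-002": [Finding("minor", "description exceeds 500 characters")],
    "S-003": [Finding("major", "budget field is blank")],
})
print(results)   # {'S-001': 'Go', 'S-002': 'Go', 'S-003': 'No-Go'}
print(proceed)   # False: only 2 of 3 are Go, below the 90% threshold
```

The per-submission results double as the list of what to communicate back to each submitter.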

I Submit to Your Validation

The above steps need to be considered in context. A U-11 soccer registration may require negligible infrastructure and, other than the kid's name and age, very little additional verification or validation. A major procurement will have time-stamped receipt of submissions and may require significant infrastructure to manage, along with detailed validation of information.

In other words, the above steps and model are a starting point and a heuristic for designing and scaling the process optimally. Please note that the steps above exclude the actual evaluation, such as the scoring or ranking of submissions.

Thoughts? Have I missed an important step, or are there any you would combine? As always, drop me a note with your thoughts.

Notes and References

[1] Technically, this procedure performs both verification (meeting requirements) and validation (meeting the requirements of intended use); see the ISO 9000 Quality Management vocabulary, 3.8.4 and 3.8.5. For brevity, these have been combined under a single description: ‘validation’.